The Forward-Forward Algorithm

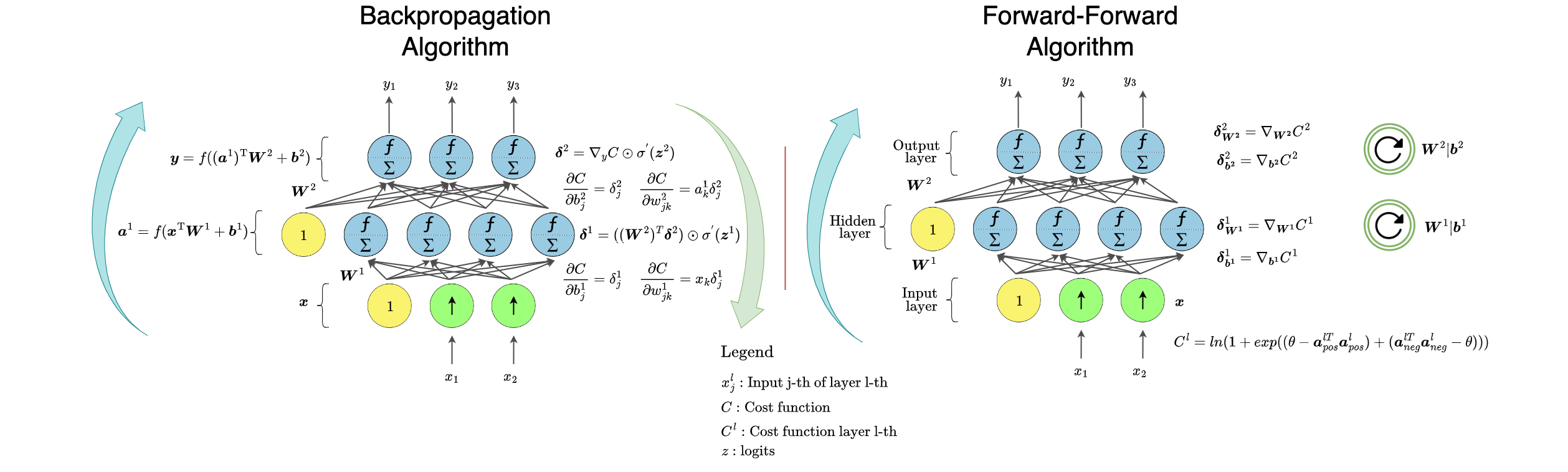

Welcome to a new, quick blog post on the Forward-Forward learning procedure for neural networks! In this paper, Hinton introduces a new approach to training neural networks that uses two forward passes instead of the forward and backward passes of backpropagation.

The Forward-Forward algorithm runs one forward pass on positive data (i.e. real data) and another on negative data (which can be generated by the network itself). Each layer in the network has its own objective function: produce high “goodness” for positive data and low goodness for negative data. One advantage of this approach is that the negative passes can be done offline, which simplifies the learning process.
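To make the per-layer objective concrete, here is a minimal NumPy sketch of a single layer trained with two forward passes. It is an illustration, not the reference implementation. Several details are assumptions that this post does not spell out: goodness is taken to be the sum of squared ReLU activations, the layer compares it to a threshold `theta` through a logistic loss, and inputs are length-normalized before entering the layer. The function names (`goodness`, `layer_forward`, `ff_step`) and the toy data are hypothetical.

```python
import numpy as np

def sigmoid(z):
    # Clip to keep np.exp from overflowing on extreme inputs.
    return 1.0 / (1.0 + np.exp(-np.clip(z, -50.0, 50.0)))

def goodness(h):
    # Assumed goodness measure: sum of squared activations per example.
    return np.sum(h ** 2, axis=1)

def layer_forward(W, b, x):
    # Normalize each input vector to unit length, then apply a ReLU layer.
    x_unit = x / (np.linalg.norm(x, axis=1, keepdims=True) + 1e-8)
    return x_unit, np.maximum(0.0, x_unit @ W + b)

def ff_step(W, b, x_pos, x_neg, theta=2.0, lr=0.05):
    """One local update for one layer: raise goodness on positive data,
    lower it on negative data. No gradient leaves the layer."""
    grad_W = np.zeros_like(W)
    grad_b = np.zeros_like(b)
    for x, sign in ((x_pos, 1.0), (x_neg, -1.0)):
        x_unit, h = layer_forward(W, b, x)
        # Per-example loss: log(1 + exp(z)) with z = -sign * (goodness - theta).
        z = -sign * (goodness(h) - theta)
        d_good = -sign * sigmoid(z) / len(x)   # d(mean loss) / d(goodness)
        d_h = 2.0 * h * d_good[:, None]        # zero wherever the ReLU is inactive
        grad_W += x_unit.T @ d_h
        grad_b += d_h.sum(axis=0)
    return W - lr * grad_W, b - lr * grad_b

# Toy data (an assumption for the demo): positives lean along feature 0,
# negatives along feature 1.
rng = np.random.default_rng(0)
x_pos = rng.normal(size=(64, 10))
x_pos[:, 0] += 3.0
x_neg = rng.normal(size=(64, 10))
x_neg[:, 1] += 3.0

W = 0.1 * rng.normal(size=(10, 32))
b = np.zeros(32)
for _ in range(300):
    W, b = ff_step(W, b, x_pos, x_neg)

print("mean goodness, positive:", goodness(layer_forward(W, b, x_pos)[1]).mean())
print("mean goodness, negative:", goodness(layer_forward(W, b, x_neg)[1]).mean())
```

In a deeper network, each layer would run the same kind of local update on the normalized output of the layer below, which is why no backward pass through the whole stack is needed.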

Overall, the Forward-Forward algorithm shows promise as a way to train neural networks effectively on small problems, and it warrants further investigation. So why not experiment with it?